1. What are Small Language Models (SLMs)?

Before diving into running Phi-2 locally, let’s take a moment to understand the concept of small language models (SLMs) and their significance in natural language processing (NLP). A SLM is a type of AI model that has been trained on a massive dataset of text but is limited in terms of its size and capabilities compared to a Large Language Model (LLM). SLMs are designed to be more lightweight and efficient, making them suitable for various applications, including chatbots, language translation, and content generation. SLMs are much smaller than LLMs, with fewer parameters and a smaller dataset, so they have a lower computational cost, making them more suitable for edge or resource-constraint devices.

2. What is Phi-2?

Phi-2 is the latest model in the Phi series of small language models (SLMs) that aim to break the conventional scaling laws of language models. Unlike large language models (LLMs) that require massive amounts of data and compute resources, Phi models are trained on a mixture of web-crawled and synthetic “textbook-quality” data, following the idea of Textbooks Are All You Need . Phi models also leverage innovations in model architecture, optimization, and data augmentation to achieve remarkable performance on various benchmarks. 😀

Phi-2 is twice as large as its predecessor Phi-1.5, and was trained for two weeks on a cluster of 96 A100 GPUs. It demonstrates outstanding reasoning and language understanding capabilities, showcasing state-of-the-art performance (for LLMs <13 billion parameters).

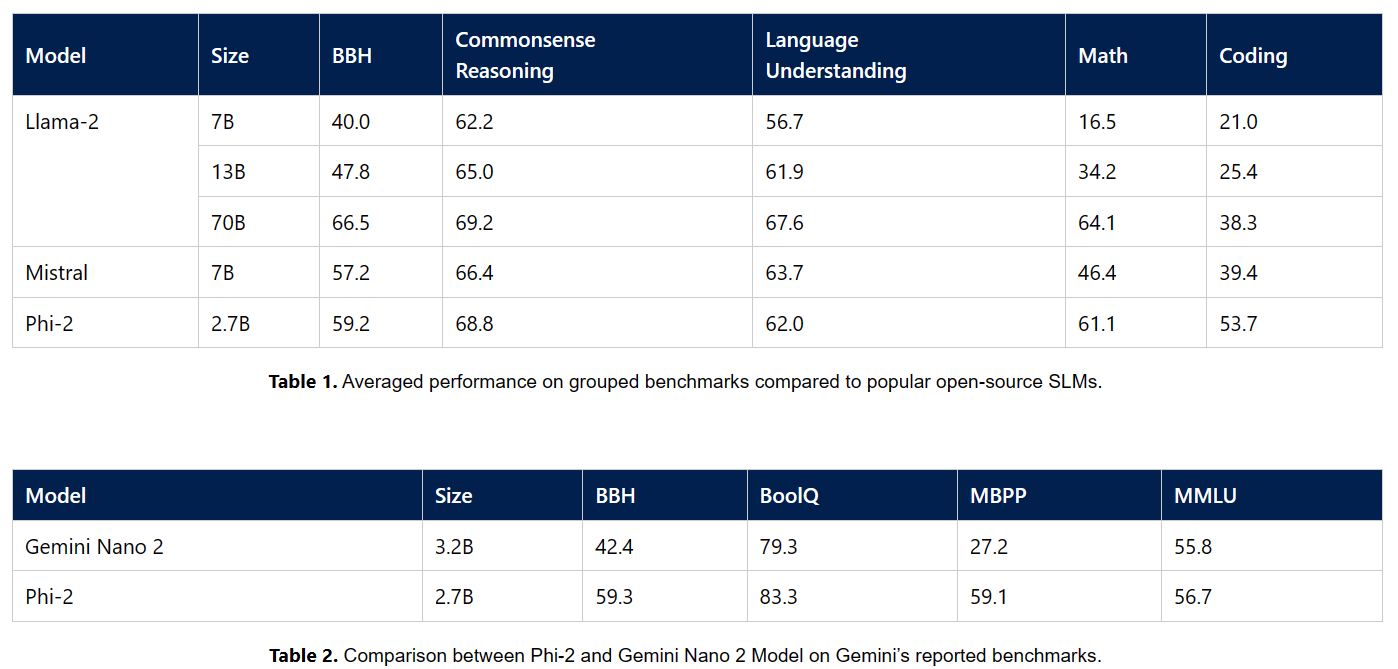

As shown in the figure below on complex benchmarks, Phi-2 matches or outperforms models up to 25x larger, such as Llama-2 and Gemini Nano. Phi-2 is also an ideal playground for researchers, as it allows for exploration around mechanistic interpretability, safety improvements, or fine-tuning experimentation on various tasks. Phi-2 is available in the Azure AI Studio (via the model catalog ) and on Hugging Face.

2.1 Phi-2’s Capabilities

What better way to show this other than Some prompts that Phi-2 can handle:

Creative Writing:

- 🗒️Prompt: “Write a short story about a time-traveling computer geek who discovers a secret algorithm book with the power to change computing history.”

- 🤖 Phi2-Generated output: “In the dimly lit archives of the ancient library, Amelia stumbled upon a dusty tome unlike any other. Its pages whispered secrets of forgotten civilizations and whispered promises of altered destinies. As she traced her fingers over the delicate script, the room seemed to blur, and suddenly, she was standing in the heart of Renaissance Florence. The air smelled of ink and ambition. The book had worked its magic, and Amelia knew she held the fate of empires in her hands.”

Philosophical Reflection:

- 🗒️Prompt: “Discuss the concept of free will versus determinism.”

- 🤖 Phi2-Generated output: “Free will and determinism have long been at odds in philosophical discourse. Some argue that our choices are predetermined by external factors—genetics, upbringing, societal norms—while others champion the idea that we possess true agency. Perhaps the truth lies in the delicate dance between the two. We navigate life’s labyrinth, sometimes guided by fate, other times forging our own path.”

Educational Explanation:

- 🗒️Prompt: “Explain the process of photosynthesis in simple terms.”

- 🤖 Phi2-Generated output: “Photosynthesis, the magical dance of plants and sunlight, is how they create their own food. Imagine tiny chlorophyll factories in leaves, capturing sunlight like solar panels. They convert that energy into chemical fuel—glucose—while releasing oxygen as a byproduct. So next time you breathe in that fresh air, thank a leaf for its photosynthetic prowess!”

Humorous Scenario:

- 🗒️Prompt: “Describe a day in the life of a mischievous squirrel.”

- 🤖 Phi2-Generated output: “Meet Nutty, the squirrel extraordinaire. His mornings begin with acorn heists, followed by elaborate treehouse construction. He’s the neighborhood’s unofficial alarm clock, chattering loudly at dawn. But his pièce de résistance? Prank-calling the crows, convincing them they’ve won a lifetime supply of shiny objects. Nutty’s motto: ‘Life’s too short not to be a little nuts.’”

3. Small Language Models (SLMs) vs Large Language Models (LLMs)

Large Language Models (LLMs) are a type of AI model that is much larger and more powerful than SLMs. LLMs have hundreds of billions of parameters and are trained on massive text datasets. This gives LLMs the ability to handle complex tasks, such as language generation, translation, and question answering, with high accuracy and fluency. However, LLMs also have some disadvantages. They are larger, making them more expensive and slower to train. They also have a higher computational cost, meaning they may require access to specialized hardware.

On the other hand, SLMs, as we called out, are smaller and more lightweight than LLMs, making them more efficient and cost-effective in training computing resources and inference. While it might seem that SLMs are also more suitable for edge or resource-constrained devices, such as mobile phones or IoT devices, they are small compared to LLMs but still require significant computational resources to run. Phi-2, for example, still has 2.7B parameters, and while it can make inferences on a CPU, it is very slow and impractical for real-time applications. One would need a GPU or a cloud-based service for any realistic use case.

3.1 When to use SLM vs LLM?

Firstly, neither model is inherently better - the choice between an SLM and an LLM depends on the specific application and requirements. SLMs are a good choice when size, cost, and speed are important considerations. LLMs are a better choice when high performance and complex capabilities are required. If a task at hand is quite narrow and in one of the supported languages, then SLMs might be good. However, for a given task, an SLM may be sufficient, but an LLM may be necessary for more complex tasks or tasks requiring high accuracy and fluency.

Furthermore, it is key to understand that it is not necessarily about the number of languages understood but rather the depth and nuance with which each model can understand and generate language. SLMs are designed to be efficient and effective within their scope, which may include a wide range of languages. LLMs like GPT-4, due to their size and complexity, often can understand and generate text in a larger number of languages and with greater nuance.

The choice between an SLM and an LLM would again depend on the specific requirements of the task, including the languages involved and the level of language understanding and generation needed. Using a combination of SLMs and LLMs is common to achieve the best results for a given application.

4. Running Phi-2 locally

On one hand, running this is simple if you just don’t want to program anything and only want to use the model. The easiest option in this case is to use [LM Studio , a web-based platform for running language models. You can use the Hugging Face API to download and run the model.

We use a simple console chat example that runs locally. We use the Hugging Face Transformers library to generate text based on user input. The user can generate a story, a haiku, or a joke on a topic of their choice. Here is how to run it locally on a Windows machine - the same should apply to a Mac or Linux machine.

The full code is below, but here are the key aspects to grok when running Phi-2 locally.

- The key is to use the

AutoModelForCausalLMandAutoTokenizerclasses from thetransformerslibrary to load the Phi-2 model and tokenizer. - We then use the

generatemethod to generate text based on a user prompt. Thegeneratemethod takes the user prompt as input and returns the generated text - We use the

from_pretrainedmethod to load the model and tokenizer from the Hugging Face model hub. - We also use the

save_pretrainedmethod to save the model and tokenizer to a local directory. This allows us to load the model and tokenizer from the local directory if they are already saved, which can help save time and resources.

The following code snippet is what loads the model and the tokenizer:

# Download the model and tokenizer from Hugging Face

model = AutoModelForCausalLM.from_pretrained("microsoft/phi-2",

torch_dtype="auto",

trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained("microsoft/phi-2",

trust_remote_code=True)

And the following is where we encode the user input and call the generation. First, we create tokens of the user prompt; the resulting tokens are returned as PyTorch tensors. Then, the model generates text based on the tokenized input. We cap the tokens to a maximum of 500 tokens, and the end-of-sequence token is used for padding if necessary. Finally, the generated tokens are decoded back into human-readable text.

inputs = tokenizer(prompt,

return_tensors="pt",

return_attention_mask=False,

add_special_tokens=False)

outputs = model.generate(**inputs,

max_length=500,

pad_token_id=tokenizer.eos_token_id)

text = tokenizer.batch_decode(outputs)[0]

The full code is below. The code is a simple console chat example that runs locally. The user can generate a story, a haiku, or a joke on a topic of their choice.

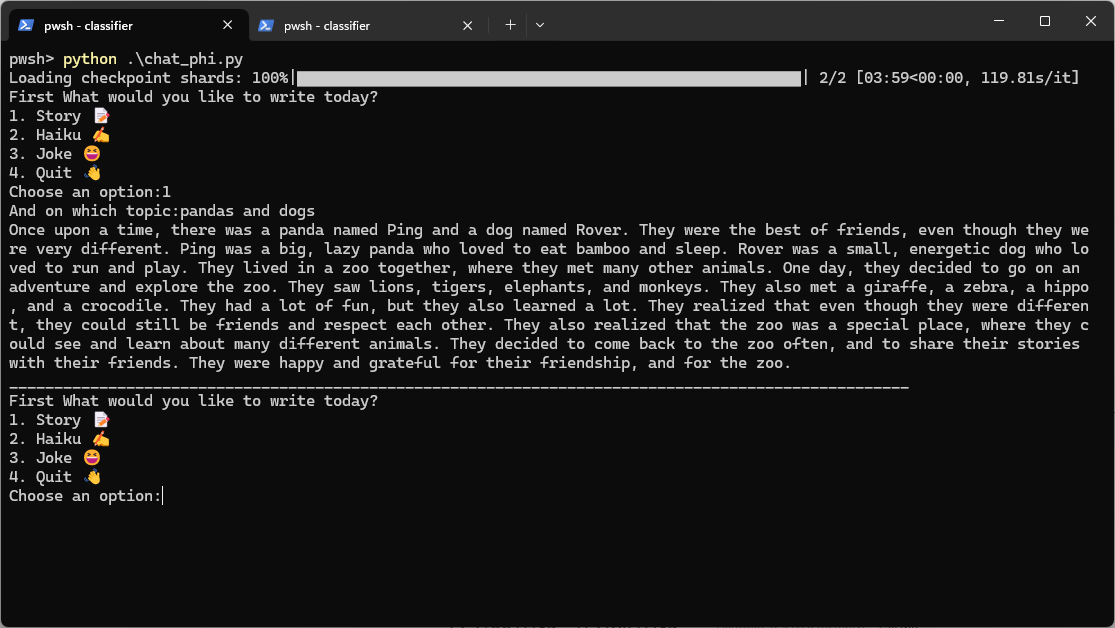

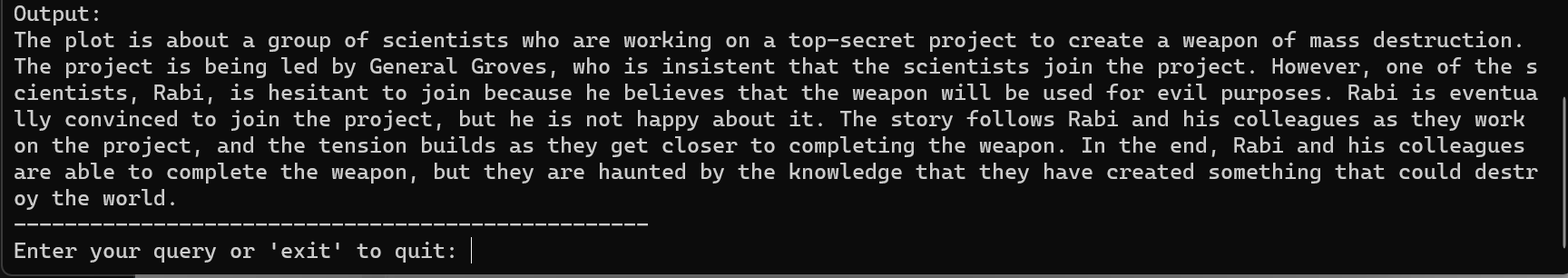

Some examples of what Phi-2 can generate using the above code are shown below. The first is a story about pandas and dogs.

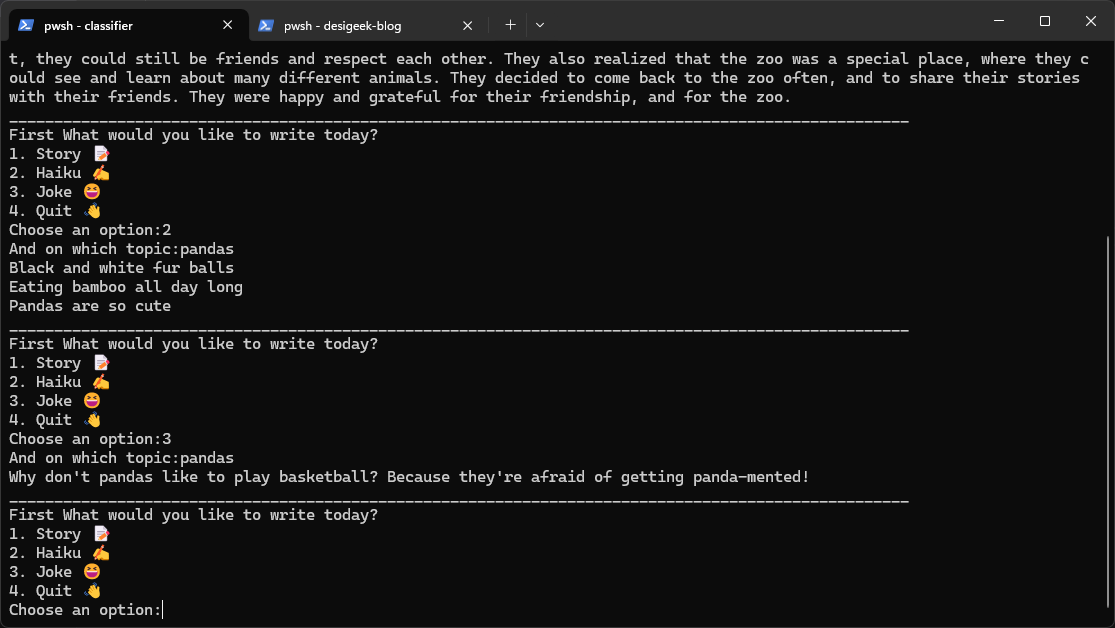

Here is another example of a Haiku and a Joke generated by Phi-2 on Pandas.

Switching gears, let’s look at how we can implement the RAG using Phi-2.

4.1 Running Phi-2 Locally - Full Code

The following code is the complete code that executes the examples we showed before for running Phi-2 locally. This can work on a CPU, but it is very slow, and a good GPU is strongly suggested.

| |

5. Implementing Retrieval-Augmented Generation (RAG) with Phi-2

RAG is a powerful technique that combines the strengths of retrieval-based and generation-based approaches to natural language processing. RAG is one of the ways one can get proprietary information and knowledge to the model and use it as part of the prompt. It leverages a retriever to find relevant context passages and a generator to produce fluent and coherent responses. The retriever identifies relevant context passages, and the generator uses these passages to generate a response.

This approach allows RAG to produce high-quality, informative, and contextually relevant responses. In-context learning is a key feature of RAG, as it allows the model to learn from the context of the conversation and generate more accurate and relevant responses. This is particularly useful in scenarios where the model needs to understand and respond to complex queries or provide detailed information on a specific topic.

At a high level, the process of implementing RAG involves the following steps:

- Generate Embeddings with Phi-2:

- Use Phi-2 to encode your context passages (documents) and extract their embeddings.

- These embeddings will represent the semantic content of each passage.

- Create a Vector Index:

- Choose a vector index library or framework (such as Faiss, Annoy, or HNSW).

- Initialize an index structure to store the embeddings efficiently.

- Add the generated embeddings to the index.

- Save Embeddings to a Local Vector Database:

- Create a local database to store the embeddings.

- For each context passage, save its corresponding embedding in the database.

- You can use the passage ID or a unique identifier as the key for retrieval.

- Perform Similarity Search:

- When you receive a new context (query), encode it using Phi-2 to obtain its embedding.

- Use the vector index to perform a similarity search against the saved embeddings.

- Retrieve the most similar context passages based on cosine similarity or another distance metric.

- Return the relevant passages as results.

In our example, we will use the FAISS library to create a vector index and perform a similarity search. We will also save the embeddings to a local database for efficient retrieval. FAISS (Facebook AI Similarity Search) is a library developed by Facebook for efficient similarity search and clustering of high-dimensional vectors. It allows for a quick nearest-neighbor search over large datasets and supports CPU and GPU-based computations. FAISS is widely used in information retrieval, recommendation systems, and other applications that require similarity search.

5.1 Loading data for RAG and Phi-2

To implement RAG, we use the script from the Oppenheimer movie - which is quite new in that it is not in the Phi-2 training set and is available as a PDF. We will extract the script from this PDF, creating embeddings, which will then save the embeddings to a local database and perform a similarity search to retrieve relevant context passages based on a user query. We will use the FAISS library to create a vector index and perform a similarity search. We will also save the embeddings to a local database for efficient retrieval.

We use the PyPDF2 library to parse PDFs, a pure Python library for reading and writing PDF files. It can extract text, merge and split documents, and more. We will use it to extract the PDF text from the Oppenheimer movie script. The following code function shows how to read the PDF and extract the text. This is efficient for our use case, but it is not the most efficient way to extract text from a PDF when thinking about production scale, especially if the PDF has a lot of images and tables.

5.2 Generate embeddings using Phi-2

Now that we have the text, the following functions show how to create the embeddings using Phi-2. We read the text as a list of context passages and then use Phi-2 to encode each passage and extract its embedding using the encode method.

| |

Here are a few things that are going on:

- Given that we are using this for inference and not training, we use a

torch.no_grad()`, which tells PyTorch not to track, calculate, or modify gradients while executing code within this block. This helps us save the amount of memory needed. - Inside this block, the input_ids are fed into the model, and the output is stored in the output variable. The logits, which are the raw, unnormalized scores outputted by the last layer of the model, are then extracted from the model’s output.

- The logits are then processed to generate the embedding for the passage. The .mean(dim=1) method calculates the mean of the logits along dimension 1, which typically represents the sequence length in a language model.

- The .detach() method detaches the result from the computation graph so that no gradients will be backpropagated along this variable.

- The .cpu() method moves the tensor to the CPU if it’s not already there. Finally, the tensor is converted to a numpy array using the .numpy() method.

- The resulting embedding is then appended to the embeddings list, which contains the embeddings for all the passages.

5.3 Creating Vector Index

The following function shows how to create a vector index using the FAISS library and perform a similarity search to retrieve relevant context passages based on a user query. The create_index function initializes a flat index structure to store the embeddings and adds the embeddings to the index. The search_query function encodes the user query using Phi-2 to obtain its embedding and performs a similarity search against the saved embeddings to retrieve the most similar context passages.

def create_index(query_embedding):

index = faiss.IndexFlatL2(query_embedding[0].shape[1]) # Euclidean distance

faiss.normalize_L2(query_embedding)

# Add embeddings to the index

for i, item in tqdm(enumerate(query_embedding), total=len(query_embedding)):

if item.ndim == 1:

item = item.reshape(1, -1) # Reshape 1D array to 2D

index.add(item)

return index

The normalize_L2() function normalizes the vectors and is a crucial step when using Euclidean distance in high-dimensional spaces to ensure that the distance is not dominated by the dimensionality of the vectors. As we iterate through the embeddings, we check if the item is a 1D array and reshape it to a 2D array if necessary. This is important because FAISS expects the input to be a 2D array, and we need to reshape the 1D array to a 2D array before adding it to the index.

The function finally returns the created index. This index can then be used to perform efficient similarity searches.

5.4 Perform Similarity Search

The following function shows how to perform a similarity search using the vector index to retrieve relevant context passages based on a user query. As noted earlier, the most similar context passages are then retrieved based on cosine similarity or another distance metric.

The function starts by encoding the input query using a tokenizer and performs a similarity search on the FAISS index using the query embedding. It retrieves the indices of the top 3 most similar passages to the input query and then retrieves the corresponding context passages from the context_passages list. The similar context passages are then concatenated into a single string and passed to the Phi-2 model to generate a response.

def search_query(input_query, inputTokenizer, model, device, index, context_passages):

# Given a new query context, encode it and perform similarity search

input_ids = inputTokenizer.encode(input_query,

return_tensors="pt",

return_attention_mask=False,

add_special_tokens=False).to(device)

with torch.no_grad():

input_ids = input_ids.long()

output = model(input_ids)

logits = output.logits

query_embedding = logits.mean(dim=1).detach().cpu().numpy()

# Perform similarity search - top 3 similar passages

_, similar_indices = index.search(query_embedding, k=3)

# Retrieve context passages based on similar_indices

similar_contexts = [context_passages[i] for i in similar_indices[0]]

# Concatenate the similar contexts into a single string

context = ' '.join(similar_contexts)

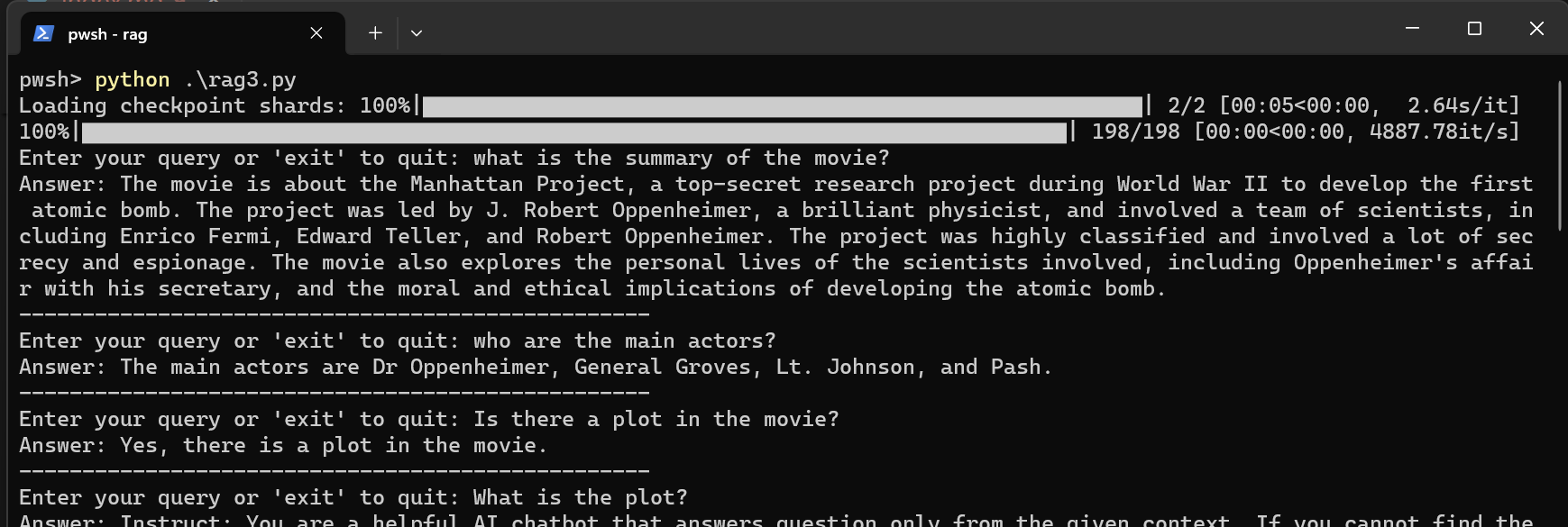

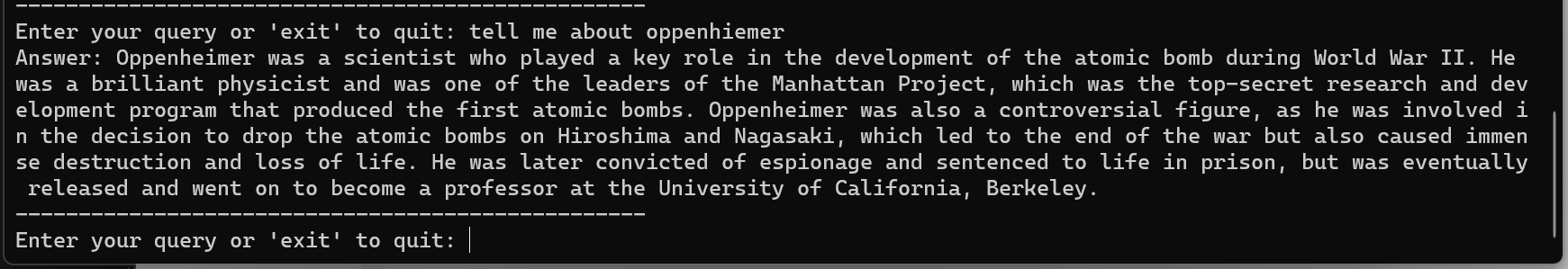

Let us run this and see how it works, as we discussed before. We will use the Oppenheimer movie script as the context passages and perform a similarity search to retrieve relevant context passages based on a user query. The next few figures show the output of us asking questions about the movie, where those pieces of information are not in the model but passed using the semantic search.

Now that we have seen the different elements, the code below brings everything together as a console app that one can run. The Oppenheimer script (pdf file) you need can be downloaded from here .

| |

Now let us switch gears and try something that pushes the ability of Phi-2.

6. Code generation example using Phi-2

If we want to push the boundaries of what Phi-2 can do, we can use it to generate code. Below is an example of using Phi-2 to generate code for a simple C function. 🤓

🗒️Prompt: “Write a program in C that implements a BPE-based tokenizer; it should implement both encoding and decoding functions. Think through this step by step.”

The code we see below is what was generated. At face value, it looks like a good start but is incomplete. It is a good starting point for a developer to continue from and shows the power of SLMs like Phi-2.

| |

⚠️ Note: It has been a while since I wrote C, but at a high level, these are some of the issues I can see with this; these issues are off the top of my head and are not exhaustive. Finally, it is not meant to test my coding capabilities. 😬

- Memory Allocation: The

mallocfunction is used without checking for successful allocation; if it returnsNULL, which is not checked, we will get hurt. - Tokenization Logic: The logic in the

encodefunction does not reflect the BPE algorithm, which involves merging the most frequent pairs of characters or bytes. - String Concatenation: The

strcatfunction is used incorrectly; instead of a null-terminated string (as part of the second argument), we get a pointer to a single character - Decoding Logic: The

decodefunction attempts to usestrcatwith a format string ("%x"), which is invalid. Thesprintffunction should be used for formatted strings.

Hopefully, this gives you a good understanding of SLMs, specifically Phi-2, and how to use them locally. 😍