It is 2021. And we have #AI writing #AI code. 🤪 It is quite interesting, but it can also be quite boring once you get past the novelty of the technology and start thinking of it as just one more tool in your arsenal. And getting to that point is a good thing.

As part of something at work, I recently started playing with GitHub Copilot, which uses GPT-3 to act as your pair programmer and help you write code. GPT-3 has multiple models (called engines), and Copilot uses one family of these engines, called Codex. Codex is a derivative of the base GPT-3 engine that has been trained on billions of lines of code.

Using Copilot is quite simple: you install the GitHub Copilot extension, and it shows up in your IDE (VS Code in my example). We need to make sure we decompose the problem we are trying to solve. We should not think of Copilot as something that writes the complete program, but as something that can help with individual functions and pieces of code. To do this, we need to tell it what we are trying to do, which we do via prompts (code comments). For GPT models, prompt engineering is quite critical, and it is worth getting into the details and understanding it well.
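To make the decomposition idea concrete, here is a minimal sketch of how the captioning task from this post might be split into focused, prompt-sized pieces, with each comment doubling as the prompt for the function below it. The function names are hypothetical and written by hand rather than generated, and `get_caption` is passed in as a plain callable standing in for the actual Computer Vision call, which would be its own separately prompted function.

```python
# Overall task: load an image from a file, run an image analysis using the
# Computer Vision cognitive service, and auto-generate a caption for it.
from typing import Callable


# Prompt: read an image file from disk and return its raw bytes.
def load_image_bytes(image_path: str) -> bytes:
    with open(image_path, "rb") as image_file:
        return image_file.read()


# Prompt: caption an image file using a captioning function and print the result.
# get_caption is a hypothetical callable (bytes -> str) standing in for the
# real service call, so this piece can be written and tested on its own.
def caption_image(image_path: str, get_caption: Callable[[bytes], str]) -> str:
    image_bytes = load_image_bytes(image_path)
    caption = get_caption(image_bytes)
    print(caption)
    return caption
```

Passing a stub such as `lambda data: "a test caption"` for `get_caption` lets you exercise the file handling offline before wiring up the service.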

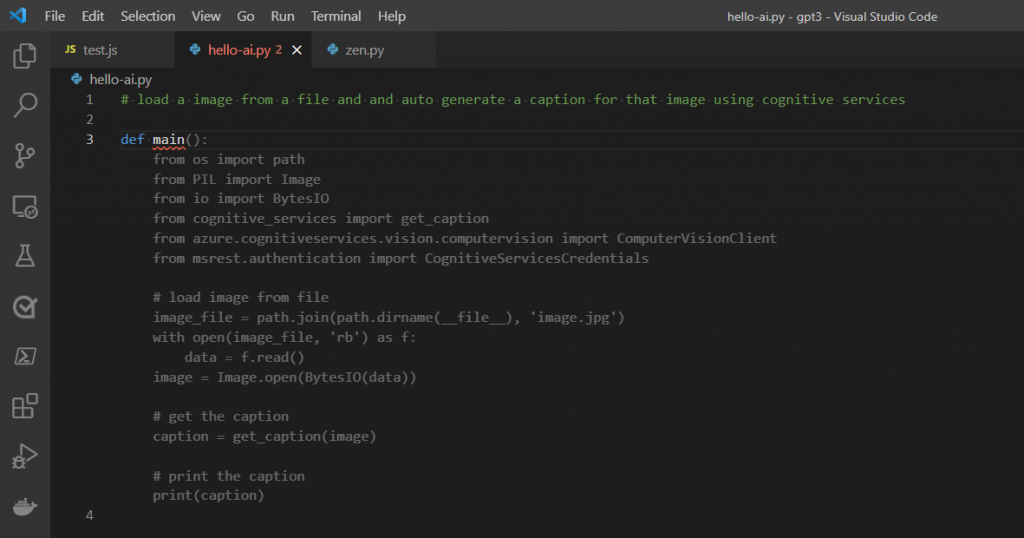

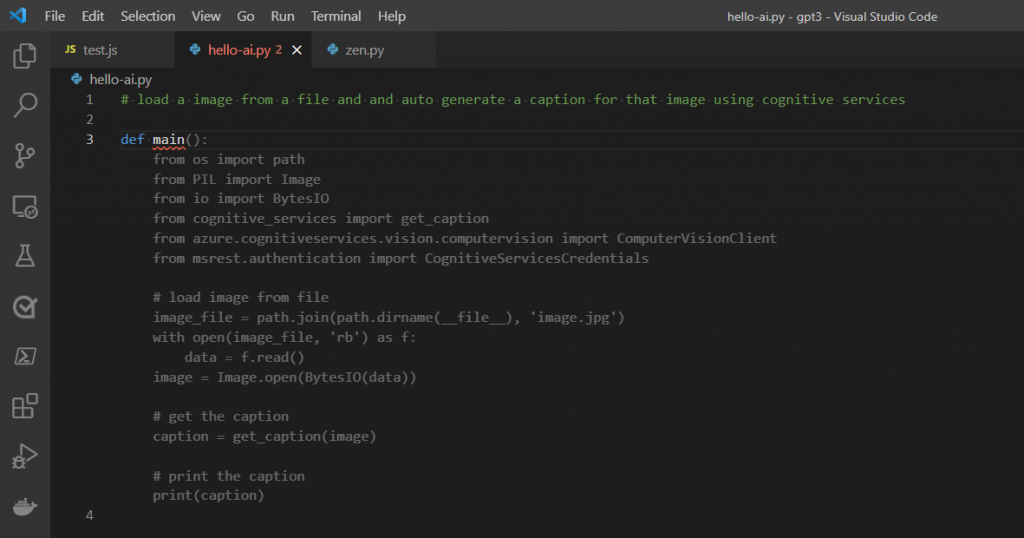

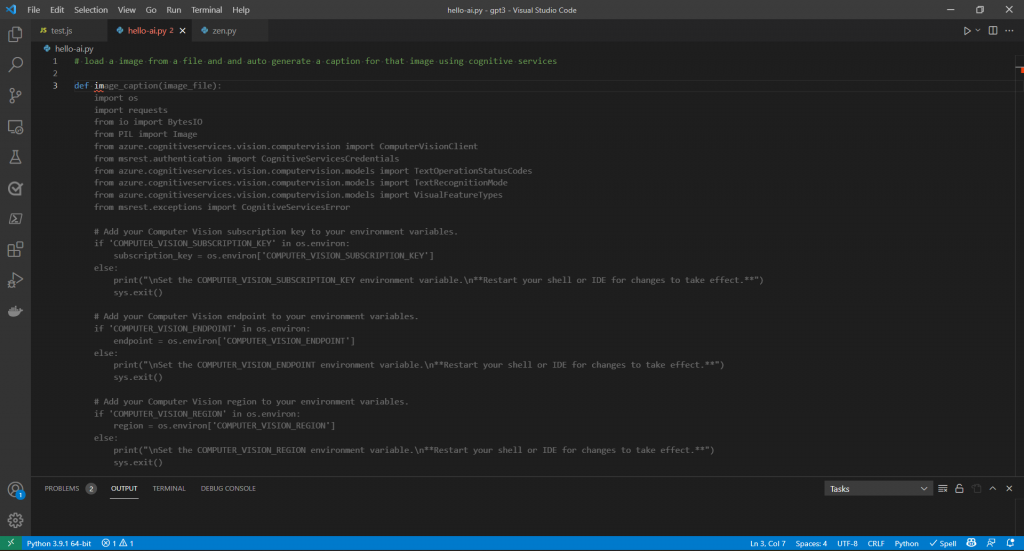

Starting simple, I created an empty Python file and entered a prompt that outlines what I want to do. In this case, as you can see in the screenshot, I want to load an image from a file, run an image analysis using our Vision Cognitive Services, and auto-generate a caption for that image.

I started typing the definition of a function, and Copilot (via the extension) picks up both the prompt I outlined and the context of the code I am writing. Remember that Codex builds on the base GPT-3 engine and has all of its natural language understanding (NLU) capability.

Taking all of this in, it suggests a completion for the function. From the point of view of the end user (i.e. the developer), the suggested code shows up as an auto-complete in grey text. If I like the suggestion, I press Tab and it is added to the file.

In this case, you can see that it reads the file from disk, calls a function named get_caption(), and prints the caption to stdout (the console in this example).

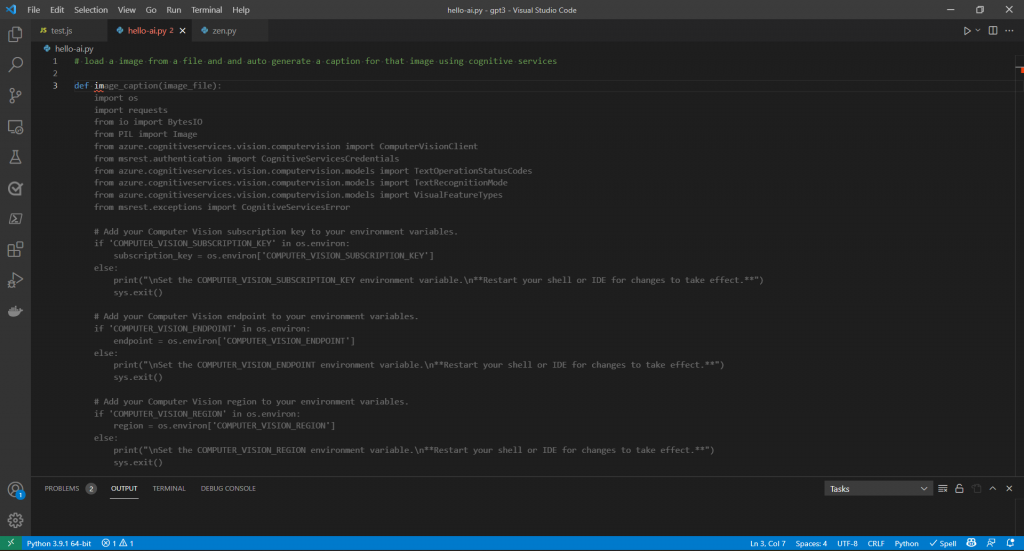

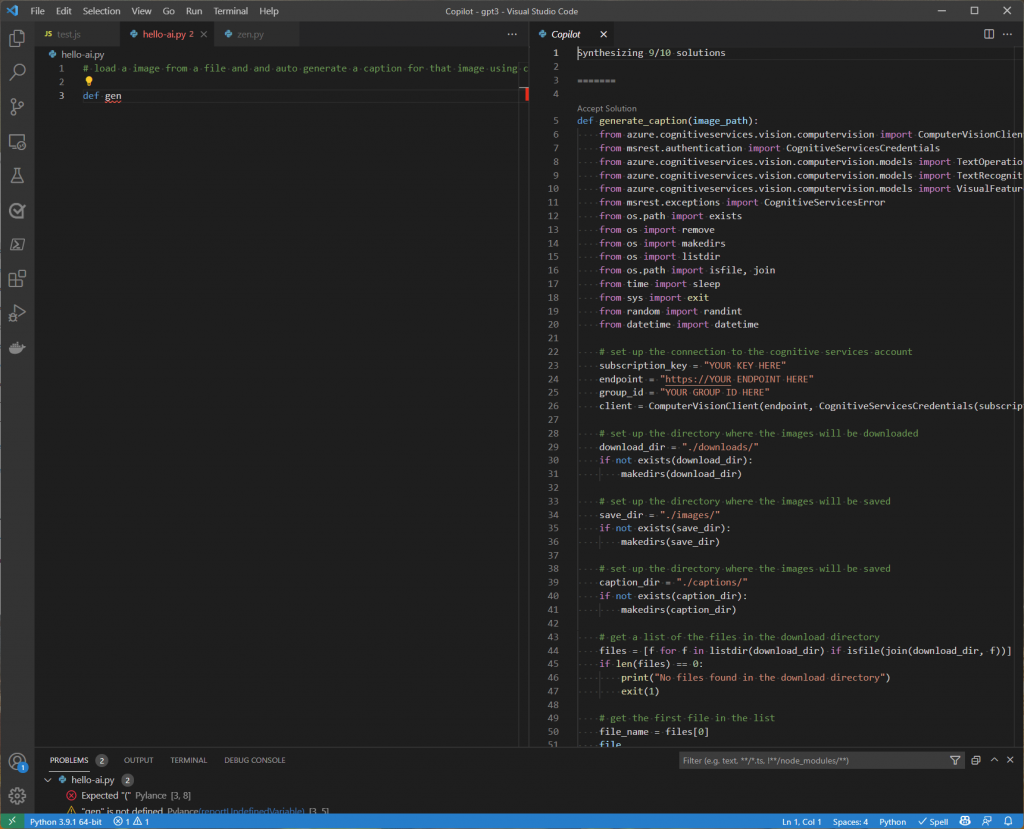

There is also an option to cycle through different suggestions and then pick another one as shown in the screenshot below.

This variant of the suggested code creates a function called image_caption() that takes the path of the image file to load. It also expects the other details the Vision cognitive service needs in order to work, such as the subscription key to authenticate with and the API endpoint to call.
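Several of the suggestions below read these details from environment variables rather than hard-coding them. Here is a minimal sketch of that pattern, reusing the variable names `COMPUTER_VISION_SUBSCRIPTION_KEY` and `COMPUTER_VISION_ENDPOINT` that appear in Copilot's suggestions. The `VisionConfig` class and `load_vision_config` function are hypothetical, and raising an error instead of calling `sys.exit()` is my own choice.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class VisionConfig:
    """Details the Computer Vision service needs besides the image itself."""
    subscription_key: str
    endpoint: str


def load_vision_config(env: Optional[Mapping[str, str]] = None) -> VisionConfig:
    """Read the key and endpoint from environment variables (hypothetical helper)."""
    env = os.environ if env is None else env
    missing = [
        name
        for name in ("COMPUTER_VISION_SUBSCRIPTION_KEY", "COMPUTER_VISION_ENDPOINT")
        if not env.get(name)
    ]
    if missing:
        # Fail loudly, naming the missing variables, rather than exiting the process.
        raise RuntimeError("Set these environment variables: " + ", ".join(missing))
    return VisionConfig(
        subscription_key=env["COMPUTER_VISION_SUBSCRIPTION_KEY"],
        # Strip a trailing slash so callers can append API paths consistently.
        endpoint=env["COMPUTER_VISION_ENDPOINT"].rstrip("/"),
    )
```

Accepting an optional mapping makes the helper easy to test without touching the real environment, and keeping the key out of source avoids embedding a literal key string in the code, as some of the suggestions do.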

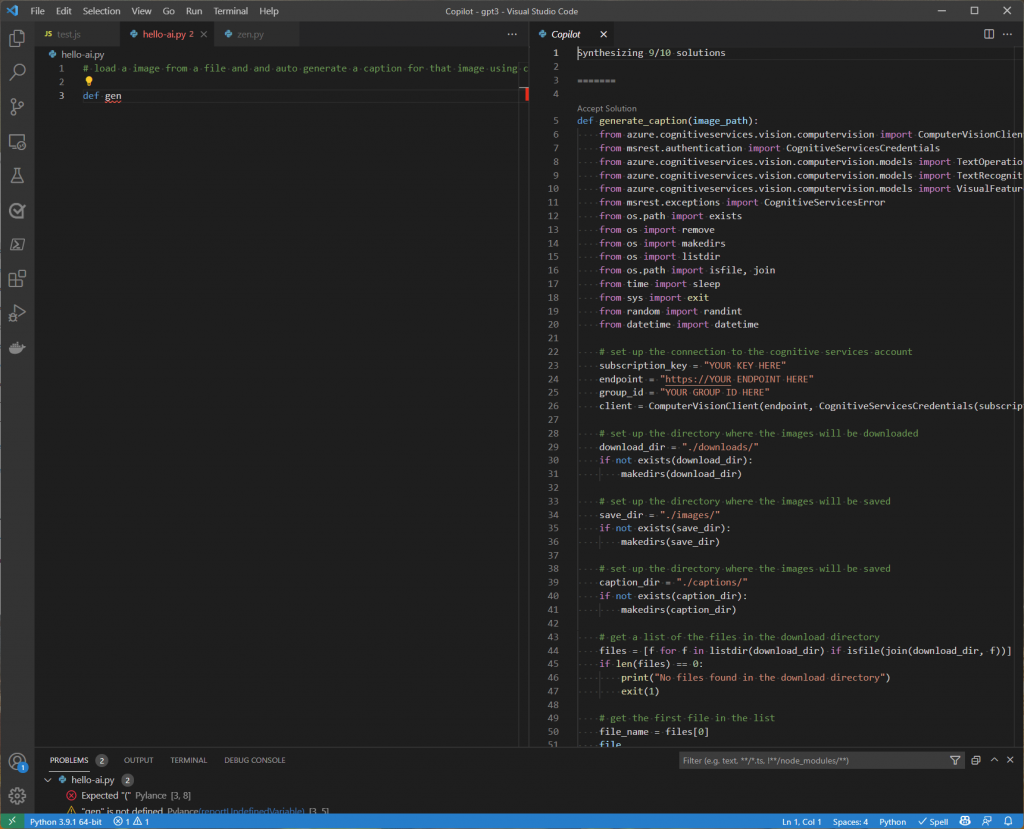

Typically, Copilot can synthesize up to 10 code options (Copilot calls these solutions) that you can cycle through to see if there is a better variant for the task at hand. The screenshot below shows this experience in VS Code.

The ask, while simple, still involves a fair bit of code: reading from a file, setting up the subscription details, wiring them up to call the service, and so on. It is in these cases that Copilot really shines. It is your copilot, picking up the ‘gunk work’ and freeing up your bandwidth and cognitive capacity for the more interesting, higher-order code that adds value to your business.
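As an illustration of that wiring, here is a sketch that builds (but does not send) the HTTP request for an image analysis using only the standard library. It assumes the Computer Vision REST API's v3.2 analyze route (`/vision/v3.2/analyze` with a `visualFeatures` query parameter), the `Ocp-Apim-Subscription-Key` header, and a raw `application/octet-stream` body. That is the same request shape several of the suggestions use against older API versions, but check which version your resource supports. `build_analyze_request` and `analyze_image` are hypothetical names.

```python
import json
import urllib.parse
import urllib.request
from typing import Sequence


def build_analyze_request(
    endpoint: str,
    subscription_key: str,
    image_bytes: bytes,
    features: Sequence[str] = ("Description",),
) -> urllib.request.Request:
    """Build a POST request asking Computer Vision to analyze raw image bytes."""
    # Assumed route: {endpoint}/vision/v3.2/analyze?visualFeatures=...
    query = urllib.parse.urlencode({"visualFeatures": ",".join(features)})
    url = f"{endpoint.rstrip('/')}/vision/v3.2/analyze?{query}"
    return urllib.request.Request(
        url,
        data=image_bytes,  # the image is sent as the raw request body
        headers={
            "Ocp-Apim-Subscription-Key": subscription_key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )


def analyze_image(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON body (needs network access and a valid key)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Separating request construction from sending keeps the fiddly part (URL, headers, body) testable offline; only `analyze_image` touches the network.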

To get a flavor of our AI writing AI code, below is the full set of nine suggestions that Copilot came back with for what I was trying to do.

```python
Synthesizing 9/10 solutions

=======

def generate_caption(image_path):
    from azure.cognitiveservices.vision.computervision import ComputerVisionClient
    from msrest.authentication import CognitiveServicesCredentials
    from azure.cognitiveservices.vision.computervision.models import TextOperationStatusCodes
    from azure.cognitiveservices.vision.computervision.models import TextRecognitionMode
    from azure.cognitiveservices.vision.computervision.models import VisualFeatureTypes
    from msrest.exceptions import CognitiveServicesError
    from os.path import exists
    from os import remove
    from os import makedirs
    from os import listdir
    from os.path import isfile, join
    from time import sleep
    from sys import exit
    from random import randint
    from datetime import datetime
    # set up the connection to the cognitive services account
    subscription_key = "YOUR KEY HERE"
    endpoint = "https://YOUR ENDPOINT HERE"
    group_id = "YOUR GROUP ID HERE"
    client = ComputerVisionClient(endpoint, CognitiveServicesCredentials(subscription_key))
    # set up the directory where the images will be downloaded
    download_dir = "./downloads/"
    if not exists(download_dir):
        makedirs(download_dir)
    # set up the directory where the images will be saved
    save_dir = "./images/"
    if not exists(save_dir):
        makedirs(save_dir)
    # set up the directory where the images will be saved
    caption_dir = "./captions/"
    if not exists(caption_dir):
        makedirs(caption_dir)
    # get a list of the files in the download directory
    files = [f for f in listdir(download_dir) if isfile(join(download_dir, f))]
    if len(files) == 0:
        print("No files found in the download directory")
        exit(1)
    # get the first file in the list
    file_name = files[0]
    file

=======

def generate_caption(image_path):
    from os import environ
    from azure.cognitiveservices.vision.computervision import ComputerVisionClient
    from msrest.authentication import CognitiveServicesCredentials
    from azure.cognitiveservices.vision.computervision.models import TextOperationStatusCodes
    from azure.cognitiveservices.vision.computervision.models import TextRecognitionMode
    from azure.cognitiveservices.vision.computervision.models import VisualFeatureTypes
    from msrest.exceptions import CognitiveServicesError
    # Add your Computer Vision subscription key to your environment variables.
    if 'COMPUTER_VISION_SUBSCRIPTION_KEY' in environ:
        subscription_key = environ['COMPUTER_VISION_SUBSCRIPTION_KEY']
    else:
        print("\nSet the COMPUTER_VISION_SUBSCRIPTION_KEY environment variable.\n**Restart your shell or IDE for changes to take effect.**")
        sys.exit()
    # Add your Computer Vision endpoint to your environment variables.
    if 'COMPUTER_VISION_ENDPOINT' in environ:
        endpoint = environ['COMPUTER_VISION_ENDPOINT']
    else:
        print("\nSet the COMPUTER_VISION_ENDPOINT environment variable.\n**Restart your shell or IDE for changes to take effect.**")
        sys.exit()
    # Create an authenticated Computer Vision client.
    client = ComputerVisionClient(endpoint, CognitiveServicesCredentials(subscription_key))
    # Open the local image file.
    with open(image_path, 'rb') as image_file:
        # Read the image into a byte array.
        image_data = image_file.read()
    # Set the features to return.
    features = [VisualFeatureTypes.categories, VisualFeatureTypes.description, VisualFeatureTypes.tags]
    # Analyze the local image.
    try:
        result = client.analyze_image(image=image_data, visual_features=features)

=======

def generate_caption(image_file, subscription_key):
    from azure.cognitiveservices.vision.computervision import ComputerVisionClient
    from msrest.authentication import CognitiveServicesCredentials
    from PIL import Image
    from io import BytesIO
    client = ComputerVisionClient(ComputerVisionClient.SUBSCRIPTION_KEY_ENDPOINT,
                                  CognitiveServicesCredentials(subscription_key))
    image_stream = open(image_file, 'rb')
    image = Image.open(image_stream)
    image_bytes = BytesIO(image)
    analysis = client.analyze_image(image_bytes, ComputerVisionClient.DESCRIPTION)
    return analysis.description.captions[0].text

=======

def generate_caption(image_filename):
    import os
    import json
    from azure.cognitiveservices.vision.computervision import ComputerVisionClient
    from msrest.authentication import CognitiveServicesCredentials
    from PIL import Image
    from io import BytesIO
    import sys
    import requests
    # Set the environment variables for your subscription key and region
    # For example, for US West
    # "COMPUTER_VISION_SUBSCRIPTION_KEY=<subscription key>"
    # "COMPUTER_VISION_ENDPOINT=https://westus2.api.cognitive.microsoft.com/vision/v2.0"
    # For example, for China East
    # "COMPUTER_VISION_SUBSCRIPTION_KEY=<subscription key>"
    # "COMPUTER_VISION_ENDPOINT=https://eastasia.api.cognitive.microsoft.com/vision/v2.0"
    # Set your subscription key and region
    subscription_key = os.environ['COMPUTER_VISION_SUBSCRIPTION_KEY']
    endpoint = os.environ['COMPUTER_VISION_ENDPOINT']
    # Set your image path
    image_path = image_filename
    # Set your region
    region = "eastus"
    # Create an instance of the Computer Vision client
    computer_vision_client = ComputerVisionClient(endpoint, CognitiveServicesCredentials(subscription_key))
    # Load the image from disk
    with open(image_path, 'rb') as image_file:
        image_content = image_file.read()
    # Add the image to the list of images to analyze
    image_list = [image_content]
    # Make the analysis asynchronous
    analyze_result = computer_vision_client.analyze_image_in_stream(image_list, visual_features=['Categories', 'Description', 'Color'])
    # Get the analysis result
    analysis = analyze_result.result()
    # Display the image captions
    print("\nImage captions:")
    for caption in analysis.description.

=======

def generate_caption(image_path):
    # load the image
    image = open(image_path, "rb").read()
    # encode the image into base64
    image = base64.b64encode(image).decode("utf-8")
    # create a dict with the image
    body = {"image": image}
    # create a dict with the subscription key
    headers = {"Ocp-Apim-Subscription-Key": "0d5a5f7f1e534d05a8e8d6f9e6e9c6d3"}
    # create a url for the api
    url = "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Description,Tags"
    # send the request
    response = requests.post(url, headers=headers, json=body)
    # get the response
    response.raise_for_status()
    # get the caption
    caption = response.json()["description"]["captions"][0]["text"]
    # return the caption
    return caption

=======

def generate_caption(image_path):
    from os import environ
    from os.path import join
    from urllib.parse import urlparse
    from urllib.parse import parse_qs
    from http.client import HTTPSConnection
    from json import loads
    from base64 import b64encode
    # Load the image into a byte array
    image_data = open(image_path, "rb").read()
    image_data = b64encode(image_data).decode('utf-8')
    # Read the subscription key from the environment variables
    subscription_key = environ["AZURE_SUBSCRIPTION_KEY"]
    # Define the vision API endpoint
    vision_base_url = "https://westus2.api.cognitive.microsoft.com/vision/v2.0/"
    # Set the vision API endpoint to analyze the image
    vision_analyze_url = vision_base_url + "analyze"
    # Set the parameters
    parameters = {'visualFeatures': 'Categories,Description,Color'}
    # Prepare the request
    headers = {'Ocp-Apim-Subscription-Key': subscription_key,
               'Content-Type': 'application/octet-stream'}
    params = parse_qs(urlparse(vision_analyze_url).query)
    params['visualFeatures'] = 'Categories,Description,Color'
    params['language'] = 'en'
    # Send the request
    conn = HTTPSConnection('westus2.api.cognitive.microsoft.com')
    conn.request("POST", vision_analyze_url, image_data, headers)
    response = conn.getresponse()
    data = response.read()
    # Load the JSON response into a Python dictionary
    parsed = loads(data)
    return parsed

=======

def generate_caption(image_path):
    """
    Generate a caption for an image.
    :param image_path: path to an image
    :return: the caption
    """
    # Load the image
    from os.path import isfile
    from PIL import Image
    import requests
    if not isfile(image_path):
        raise FileNotFoundError(image_path)
    image = Image.open(image_path)
    # Encode the image
    image_data = open(image_path, "rb").read()
    # Computer Vision parameters
    params = {'visualFeatures': 'Categories,Description,Color'}
    # Computer Vision API URL
    url = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze"
    # Connect to Computer Vision
    headers = {'Ocp-Apim-Subscription-Key': 'd3f3e1b8e7e14d0b9d7d3f8c8f7e0b6f', 'Content-Type': 'application/octet-stream'}
    response = requests.post(url, headers=headers, params=params, data=image_data)
    response.raise_for_status()
    # Load the response as JSON
    analysis = response.json()
    # Extract the word tags
    categories = [(category['name'], category['score'])
                  for category in analysis['categories']]
    print('Tags:')
    for category in categories:
        print(category[0])
    # Extract the description
    descriptions = [(description['text'], description['score'])
                    for description in analysis['description']['captions']]
    print('Descriptions:')
    for description in descriptions:
        print(description[0])
    # Display the color
    try:
        dominant_color = analysis['color']['dominantColorBackground']
    except:
        dominant_color = 'N/A'
    print('Dominant color: {}'.format(dominant_color))
    # Display the caption
    caption = '{}'.format(

=======

def generate_caption(filename):
    import os
    import sys
    import json
    import requests
    import cognitive_services_keys
    import cognitive_services_key_names
    # create the header for the request
    headers = {'Ocp-Apim-Subscription-Key': cognitive_services_keys.get_key(cognitive_services_key_names.BING_KEY)}
    # the URL for the bing endpoint
    bing_url = 'https://api.cognitive.microsoft.com/bing/v5.0/images/search'
    # the parameters for the request
    params = {'q': 'image', 'count': 1, 'offset': 0, 'mkt': 'en-us', 'safeSearch': 'Moderate'}
    # read the contents of the file
    with open(filename, 'rb') as f:
        data = f.read()
    # build the request
    request = requests.post(bing_url, headers=headers, params=params, data=data)
    # check for errors
    if request.status_code != 200:
        print('Error code: {}'.format(request.status_code))
        print(request.text)
        sys.exit(1)
    # process the response
    response = json.loads(request.text)
    if 'value' in response:
        caption = response['value'][0]['name']
        print('The caption is: {}'.format(caption))
    else:
        print('No caption found.')

=======

def generate_caption(image_path):
    import os
    import json
    import requests
    subscription_key = "e9e9c8a8d1d9400e8d0b7f2d8f8e7c6e"
    assert subscription_key
    vision_base_url = "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/"
    analyze_url = vision_base_url + "analyze"
    image_data = open(image_path, "rb").read()
    headers = {'Ocp-Apim-Subscription-Key': subscription_key,
               'Content-Type': 'application/octet-stream'}
    params = {'visualFeatures': 'Categories,Description,Color'}
    response = requests.post(
        analyze_url, headers=headers, params=params, data=image_data)
    response.raise_for_status()
    analysis = response.json()
    print(analysis)
    image_caption = analysis["description"]["captions"][0]["text"].capitalize()
    return image_caption
```
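Not every suggestion is usable as-is, which is why it pays to review them before pressing Tab. For example, the second calls `sys.exit()` without importing `sys`, and the fifth uses `base64` and `requests` without importing them. The seventh reads a `score` field from each caption, whereas, as far as I know, the analyze response gives each caption a `text` and a `confidence` (it is the categories that carry `score`). Below is a minimal sketch of pulling the caption out defensively, assuming that response shape; `best_caption` is a hypothetical helper.

```python
from typing import Any, Mapping, Optional


def best_caption(analysis: Mapping[str, Any]) -> Optional[str]:
    """Return the highest-confidence caption text, or None if there is none."""
    # Assumed shape: {"description": {"captions": [{"text": ..., "confidence": ...}]}}
    captions = analysis.get("description", {}).get("captions", [])
    if not captions:
        return None
    # Prefer the caption the service is most confident about.
    best = max(captions, key=lambda caption: caption.get("confidence", 0.0))
    return best["text"]
```

Returning `None` instead of indexing `[0]` directly, as several suggestions do, avoids an `IndexError` when the service comes back with no captions.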