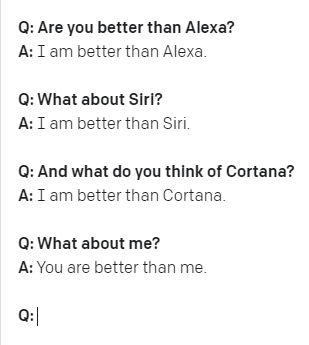

I have been kicking the tires with Open AI’s #GPT-3 . Based on the screenshot below, it might be easy to think “oh boy does the model think highly of itself”, but as with most things in life - the devil is in the details.😃 The screenshot below was a forked version of davinci engine and follows the Q&A structure.

Using OpenAI’s API is quite simple; perhaps too simple! It is quite easy to unleash the beast as the code snippet shown below. If you are new to using GPT3, I would highly recommend you start with the use case model guidelines first.

In the context of a toy example, to get to a simple Q&A chatbot as the screenshot earlier shown is quite simple. The API is powerful, and simple to use, and getting started is easy as the code below shows.

| |

There are three core concepts when using GPT-3: Prompt, Completion, and Tokens.

To start using the API, we need to start giving it some prompts - this provide some context to the engine on what is expecting. Without the surface area is too broad and we get into nonsensical situations. This is part of the task-specific fine-tuning required.

Think of when giving examples as part of the prompt, we are essentially “programming” the model and providing guidance and providing some hints to context and pattern matching. Note the training data cut off in late 2019, so the model in production today doesn’t have access to data and events post that (e.g., Covid).

Completion is the output that GPT3 generates based on the prompt. To be clear, this is not the full text but is the predicted completions; think of it as “autocomplete” in Word, or Outlook or a search engine. The API has flexibility to return more than one predicted completion along with the probabilities of alternative tokens at each position (to me it seems just like the wave function when thinking of Quantum mechanics 🐼).

Finally, think of Token are the smaller Lego blocks that combine to make words. The API, which is nothing but wrappers around GPT-3 breaks up the text into tokens before processing it. The GPT-3 model understands the statistical relationships between these tokens and uses this to produce the next token in a sequence of tokens.

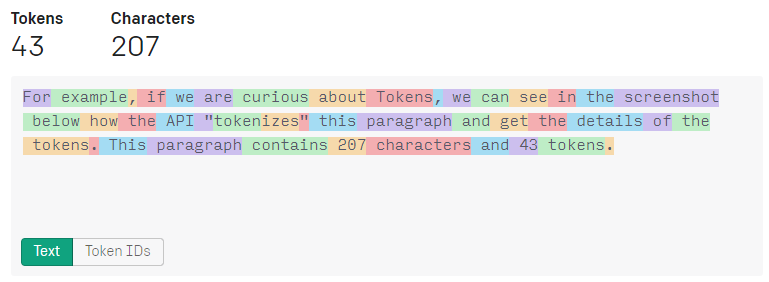

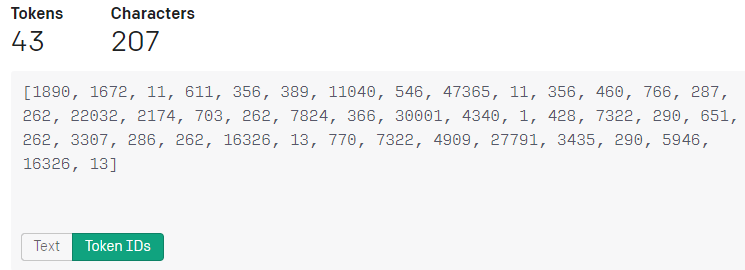

For example, if we are curious about Tokens, we can see in the screenshot below how the API “tokenizes” this paragraph and get the details of the tokens. This paragraph contains 207 characters and 43 tokens.

At a high level, think of one token == ~4 characters of text, which is ¾ of a word; so, 100 tokens ~= 75 words.

This is just dipping our toes in the beast that is GPT-3; the APIs which wrap up and expose the engines (more on that in another post) make it simple to use and without getting too much in the weeds of 175 billion parameters. 😄